This article is for you if you are an IT administrator, an educator or a tech-savvy person who has to deal with different types of audio/video technology and wants to better understand what is involved in, say, connecting VGA to HDMI.

The article explains the differences between formats such as VGA, DVI, HDMI and DisplayPort, and the most effective and cost-efficient ways to connect different kinds of equipment.

VGA, DVI, HDMI and DisplayPort are computer display standards. A standard is typically associated with certain video connectors, expansion cards and monitors.

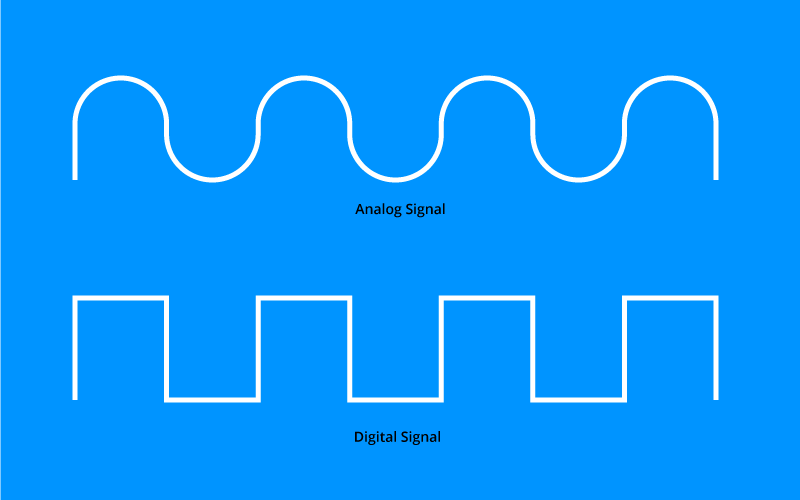

Digital signals have two main benefits over analog signals.

The first benefit is that digital signals use the available bandwidth more efficiently. Because an analog signal is continuous, there is no practical way to strip unnecessary information out of it. A digital signal is a sequence of discrete values, so it can be processed (for example, compressed) to carry only the information that is actually needed.

The second benefit is that digital signals are better at maintaining quality. When an analog signal is amplified, any noise it has picked up is amplified along with it. Noise affects digital signals too, but the receiving electronics only have to decide which of a few discrete levels was sent, so small amounts of noise can be ignored entirely. As a result, the quality of the signal stays consistently high.
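To make the noise argument concrete, here is a minimal simulation sketch. It assumes an idealized channel where a 0 is sent as 0.0 V and a 1 as 1.0 V, and where noise of at most ±0.3 V is added; these levels, the 0.5 V threshold and the function names are illustrative assumptions, not the signaling actually used by HDMI or DVI.

```python
import random

# Illustrative assumption: 0 -> 0.0 V, 1 -> 1.0 V, decision threshold 0.5 V.
def transmit(bits, noise, rng):
    """Send each bit as 0.0 V or 1.0 V; the channel adds up to +/- `noise` volts."""
    return [float(b) + rng.uniform(-noise, noise) for b in bits]

def receive(voltages, threshold=0.5):
    """Decide which discrete level was sent: anything above the threshold is a 1."""
    return [1 if v > threshold else 0 for v in voltages]

rng = random.Random(42)
bits = [rng.randint(0, 1) for _ in range(1000)]
received = receive(transmit(bits, noise=0.3, rng=rng))
print(received == bits)  # True: noise of at most 0.3 V never crosses the 0.5 V threshold

# An analog receiver has no threshold to fall back on. The noise stays in the
# sample, and amplifying the sample multiplies the error by the same gain.
clean = 0.42
noisy = clean + rng.uniform(-0.3, 0.3)
gain = 10.0
print(abs(noisy - clean), abs(gain * noisy - gain * clean))
```

As long as the noise stays below half the distance between the two levels, the digital receiver recovers every bit exactly, while the analog error simply scales with the gain.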

Analog-to-digital converters (ADCs) turn an analog signal into a digital one by replacing each real-valued sample of the analog signal with the nearest value from a finite set of discrete values.
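The quantization step an ADC performs can be sketched in a few lines. This sketch assumes an input range of 0 to 0.7 V (roughly the level range of VGA's analog color signals) and an 8-bit converter with 256 codes; `quantize` and `dequantize` are hypothetical helper names for illustration, not the interface of any real ADC chip.

```python
# Assumptions for illustration: 0-0.7 V input range, 8-bit converter.
def quantize(voltage, v_min=0.0, v_max=0.7, bits=8):
    """Replace a real-valued voltage with the nearest of 2**bits integer codes."""
    levels = 2 ** bits
    clamped = min(max(voltage, v_min), v_max)  # inputs outside the range saturate
    step = (v_max - v_min) / (levels - 1)      # voltage difference between codes
    return round((clamped - v_min) / step)

def dequantize(code, v_min=0.0, v_max=0.7, bits=8):
    """Map a code back to the voltage it stands for."""
    step = (v_max - v_min) / (2 ** bits - 1)
    return v_min + code * step

for v in [0.0, 0.1234, 0.35, 0.7, 0.9]:
    code = quantize(v)
    print(f"{v:.4f} V -> code {code:3d} -> {dequantize(code):.4f} V")
```

Every in-range voltage comes back within half a step (about 1.4 mV here) of its original value; that rounding error is the information the conversion deliberately throws away.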

VGA is short for Video Graphics Array. Originally, it described display hardware manufactured by IBM for the IBM PS/2 line of personal computers, introduced in 1987.

Fundamentally, the VGA standard became the lowest common denominator for all PC graphics hardware. The original VGA specification supported up to 640×480 pixels in 16 colors (4 bits per pixel) or 320×200 pixels in 256 colors (8 bits per pixel), with 256 kilobytes of video RAM.
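A quick back-of-the-envelope calculation shows how the 256 KB of video RAM shaped these modes. This sketch only counts the bytes needed for one screen of pixels; fitting in memory is necessary but not sufficient, since the actual VGA hardware also imposed its own limits. The helper name is hypothetical.

```python
VGA_VIDEO_RAM = 256 * 1024  # 256 KB of video RAM on the original VGA

def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to store one full screen of pixels."""
    return width * height * bits_per_pixel // 8

modes = [
    ("640x480, 16 colors", 640, 480, 4),
    ("320x200, 256 colors", 320, 200, 8),
    ("640x480, 256 colors", 640, 480, 8),  # not an original VGA mode
]
for name, w, h, bpp in modes:
    size = framebuffer_bytes(w, h, bpp)
    print(f"{name}: {size:,} bytes, fits in 256 KB: {size <= VGA_VIDEO_RAM}")
# 640x480, 16 colors: 153,600 bytes, fits in 256 KB: True
# 320x200, 256 colors: 64,000 bytes, fits in 256 KB: True
# 640x480, 256 colors: 307,200 bytes, fits in 256 KB: False
```

The last line explains why 256-color graphics on the original VGA were limited to a lower resolution: a full 640×480 screen at 8 bits per pixel needs more memory than the card had.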

Today, most manufacturers have switched to other standards and no longer use the VGA analog interface (not to be confused with the original VGA screen resolution standard) with its blue, three-row, 15-pin VGA connector. However, there was a time, around 2010, when manufacturers still used the VGA analog interface to transmit high-definition video.

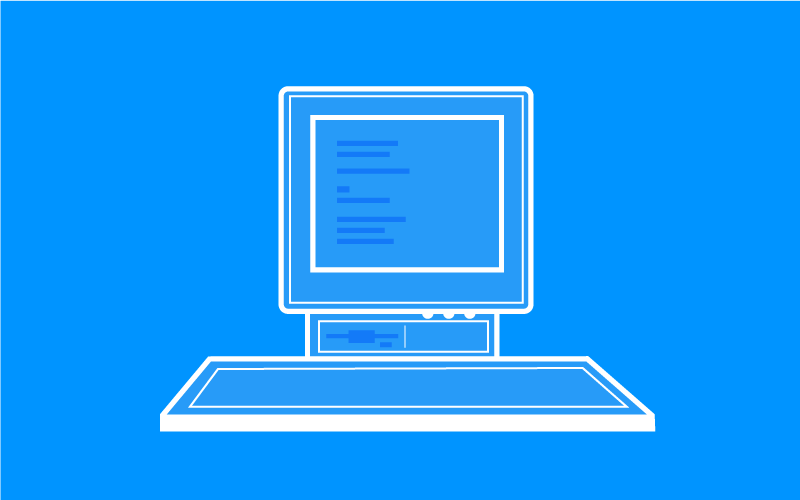

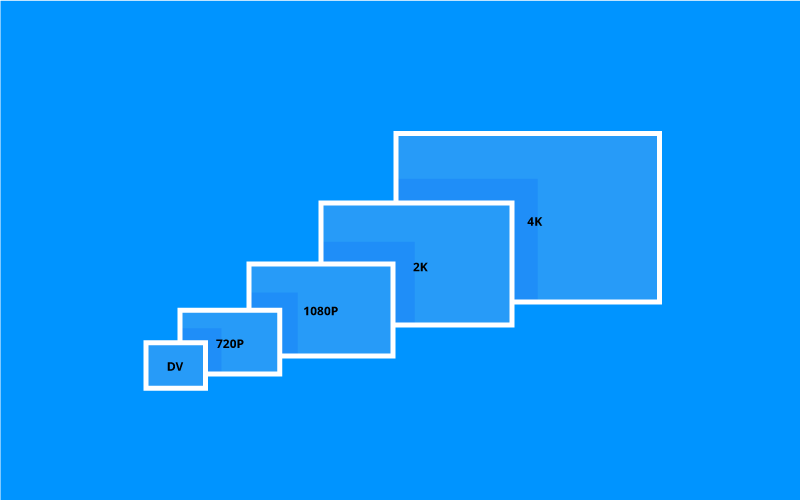

The term high definition applies to video that has 720 horizontal lines or more and an aspect ratio of 16:9. It includes formats such as 720p, 1080i, 1080p, 1440p, 4K UHDTV and 8K UHD.
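The definition above can be written down as a simple check. This is a sketch of the article's rule of thumb only (at least 720 lines and an exact 16:9 ratio); `is_high_definition` is a hypothetical name, and real-world marketing labels are often looser than this.

```python
def is_high_definition(width, height):
    """At least 720 lines and an aspect ratio of exactly 16:9."""
    return height >= 720 and width * 9 == height * 16

for w, h in [(1280, 720), (1920, 1080), (3840, 2160), (640, 480), (1024, 768)]:
    print(f"{w}x{h}: {'HD' if is_high_definition(w, h) else 'not HD'}")
# 1280x720: HD
# 1920x1080: HD
# 3840x2160: HD
# 640x480: not HD
# 1024x768: not HD  (768 lines, but a 4:3 aspect ratio)
```

Comparing `width * 9` with `height * 16` keeps the aspect-ratio test in integer arithmetic, so there is no floating-point rounding to worry about.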

When you connect an older computer to a newer TV, for example going from VGA to HDMI, the picture from the computer may not scale to fill the TV screen, or the aspect ratios of the two devices may not match. If this happens, you have several options: find a resolution setting that works for both the computer and the TV, show only part of the picture on the TV screen, or accept black bars at the sides of the screen.
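The last two options come down to simple arithmetic. The sketch below assumes a 4:3 computer picture at 1024×768 shown on a 16:9 TV at 1920×1080; TVs and graphics drivers do this scaling internally, and `fit_to_screen` is a hypothetical helper written only to show the math.

```python
def fit_to_screen(src_w, src_h, dst_w, dst_h, crop=False):
    """Scale a picture to a screen while keeping its aspect ratio.

    crop=False: show the whole picture; leftover screen area becomes black bars.
    crop=True:  fill the screen; the parts of the picture that overflow are cut off.
    Returns (scaled_width, scaled_height, x_offset, y_offset). A positive offset
    is the size of one black bar; a negative one is how much is cropped per side.
    """
    ratios = (dst_w / src_w, dst_h / src_h)
    scale = max(ratios) if crop else min(ratios)
    out_w, out_h = round(src_w * scale), round(src_h * scale)
    return out_w, out_h, (dst_w - out_w) // 2, (dst_h - out_h) // 2

print(fit_to_screen(1024, 768, 1920, 1080))             # (1440, 1080, 240, 0)
print(fit_to_screen(1024, 768, 1920, 1080, crop=True))  # (1920, 1440, 0, -180)
```

In the first case, the picture fills the full height and leaves a 240-pixel black bar on each side. In the second, it fills the whole screen but loses 180 lines at both the top and the bottom.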

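In North America, the most common standard-definition video signal has 480 visible lines and a refresh rate of about 60 Hz; the 576-line, 50 Hz signal is the standard in Europe and other PAL regions.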

No standard ties a specific video quality to the analog VGA interface itself. In practice, transmitting a higher-quality signal requires a higher-quality cable, typically one with coaxial wiring and good insulation.

The phasing out of VGA chipset support in favor of digital alternatives started around 2010, and large manufacturers such as Intel and AMD had stopped supporting the format by 2015. This means that if your computer or TV was manufactured around 2010, it is likely to have a VGA connector.

If you bought a computer or TV after 2015, it probably has one of the other connector formats described below.

DVI is short for Digital Visual Interface. It was created in 1999 by the Digital Display Working Group (DDWG) to transmit uncompressed digital video from video sources, such as computers and gaming consoles, to displays. Note that the term DVI refers only to a video display interface. Unlike VGA, it was never associated with screen resolutions or computer display standards.

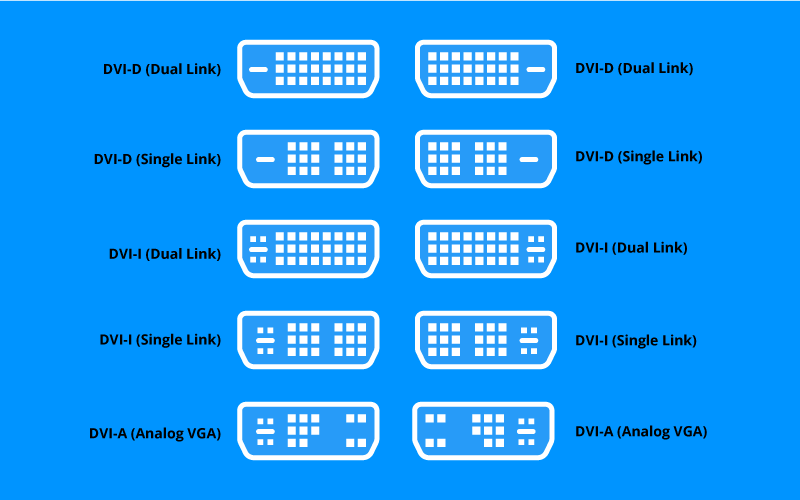

There are several types of DVI connectors. The type of connector depends on what kind of signal the connector carries.

DVI-I combines digital and analog signals in the same connector.

DVI-D carries digital signals only (hence the letter D in its name).

DVI-A carries only analog signals.

Intel and AMD announced in December 2010 that they would phase out support for DVI at the same time as VGA, on the same timeline: both companies planned to complete the transition by 2015.

HDMI is short for High-Definition Multimedia Interface. It is a proprietary interface that carries both digital audio and digital video from an HDMI-compatible source device.

HDMI became a de facto standard for the transmission of digital audio and video. The HDMI Forum is an organization created in 2011 to allow interested parties to participate in the development of future HDMI specifications. Its members include Intel, AMD, Bose, Texas Instruments, Netflix and others.

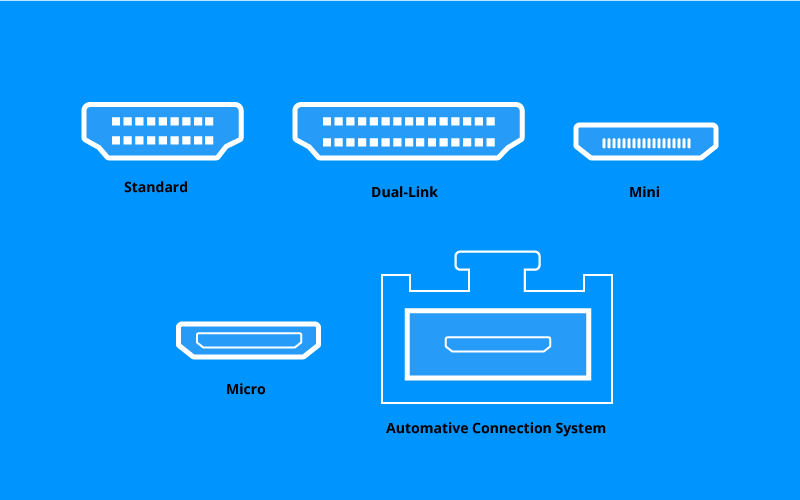

There are five types of HDMI connectors: Type A (Standard), Type B (Dual-Link), Type C (Mini), Type D (Micro) and Type E (Automotive). They are described in the HDMI 1.0 through HDMI 1.4 specifications, which were published by the HDMI founders; the HDMI Forum has developed the specification since HDMI 2.0. As of August 2017, the forum has announced HDMI 2.1, but most of the information in it concerns devices that will reach the market in 2018 and later.

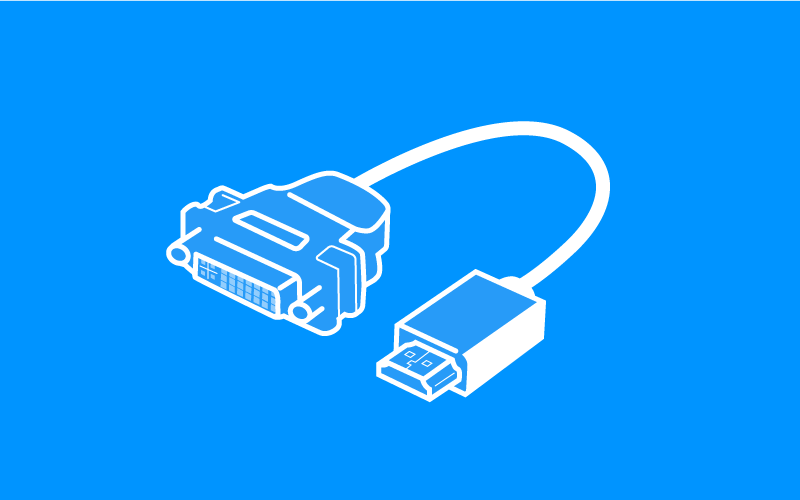

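HDMI video is electrically compatible with single-link DVI-D (the DVI variant that uses one set of data channels). For this reason, all you need to connect an HDMI device to a single-link DVI device is a simple passive adapter. Keep in mind that DVI does not officially carry audio, so sound may need a separate connection.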

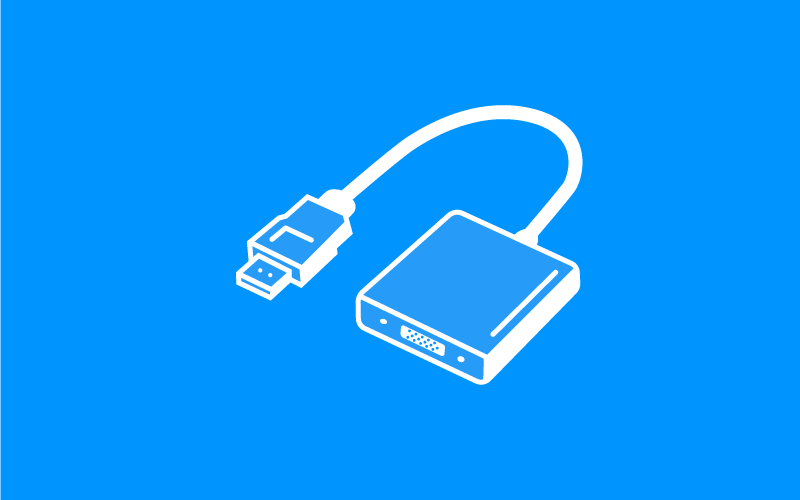

There are two important differences between the VGA and HDMI interfaces. First, VGA is an analog interface, while HDMI is a digital one. Second, VGA carries only video, while HDMI carries both audio and video. For these reasons, connecting a device with a VGA interface to a device with an HDMI interface takes more than a cable: you need an adapter that converts between the analog and digital signals, plus a separate connection for the audio (many such adapters have a 3.5 mm audio input for this purpose).

The good news is that such adapters are small and inexpensive. You can get one for about $20.
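The rule behind these adapter choices can be sketched as a small lookup: if the two connectors share a signal type, a passive adapter or cable is enough; if not, the signal has to be converted. This is a simplified sketch under stated assumptions. The signal table encodes only what this article says about each connector, it ignores single-link vs. dual-link limits and DisplayPort, it treats only HDMI as carrying audio, and `connection_needs` is a hypothetical name.

```python
# Signal types each connector can carry, as described in this article (simplified).
SIGNALS = {
    "VGA": {"analog"},
    "DVI-A": {"analog"},
    "DVI-I": {"analog", "digital"},
    "DVI-D": {"digital"},
    "HDMI": {"digital"},
}
# Assumption: among these connectors, only HDMI carries audio.
CARRIES_AUDIO = {"HDMI"}

def connection_needs(source, display):
    """Return (needs_active_converter, needs_separate_audio) for a source/display pair."""
    # No shared signal type means the video must be converted (analog <-> digital).
    needs_converter = not (SIGNALS[source] & SIGNALS[display])
    # Audio only travels over the cable if both ends carry it.
    needs_separate_audio = not (source in CARRIES_AUDIO and display in CARRIES_AUDIO)
    return needs_converter, needs_separate_audio

print(connection_needs("VGA", "HDMI"))    # (True, True)
print(connection_needs("HDMI", "DVI-D"))  # (False, True)
print(connection_needs("DVI-I", "VGA"))   # (False, True)
print(connection_needs("HDMI", "HDMI"))   # (False, False)
```

The VGA-to-HDMI case is the only one here that needs both an active converter and a separate audio path, which matches the kind of adapter described above.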

Typically, devices with a VGA interface have lower video resolution than modern HDMI devices. In computers with a VGA interface, the lower resolution is usually the result of video card limitations. In older displays with a VGA interface, the limiting factor is usually the resolution of the screen itself.

DisplayPort was created in 2006 by Dell and later standardized by the Video Electronics Standards Association (VESA). Just like HDMI, it was introduced to replace VGA and DVI standards. Also just like HDMI, DisplayPort is a digital interface that can transport both audio and video.

For end users, the biggest difference between DisplayPort and HDMI is the choice of connectors: HDMI cables and connectors come in various sizes.

DisplayPort, by contrast, has just one type of cable and only two types of connectors: Standard and Mini.

Wireless streaming with HDMI is a term that describes a way to transmit audio and video signals from a device (for example, a computer or a smartphone) to a screen such as a TV without any cables. If you're interested in learning more about the technology behind wireless streaming with HDMI, check out our other article, Your guide to wireless streaming for HDMI.

Take a look at our comparisons to get a bigger picture on how our wireless solution stacks up against the competition.