Great success comes at an even greater expense. Here’s a lowdown on how the Infrastructure team reduced the operational costs of the Airtame Cloud environment.

Airtame Cloud was created as a simple service to improve the ergonomics of managing a large number of Airtame devices. As the number of active users rose quickly, we soon started to encounter scalability and runaway cost issues related to the infrastructure of our Cloud product.

Our Cloud is fully backed by AWS services. Airtame devices communicate with the Cloud via secure websockets, which are used to synchronize settings between the Cloud and Airtames. This results in a large number of active connections, usually around 10k.

The amount of exchanged data is relatively large. Devices send pictures of dynamic dashboards, which are displayed while the Airtame device is not streaming.

If we gather all these facts we start to see that Cloud costs are related to the increasing number of users. This was a good starting point for us.

Our infrastructure hadn’t changed much over the previous few months. The only change was the number of users, which is, of course, a great problem to have!

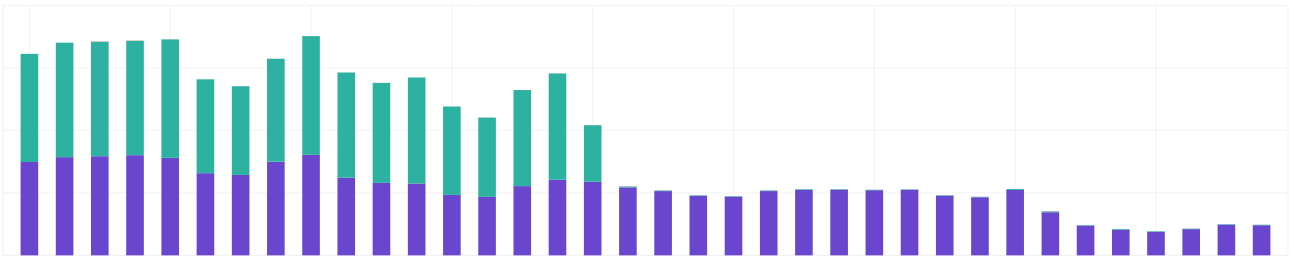

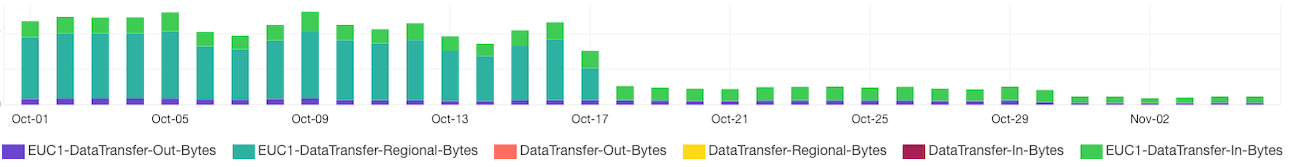

We discovered the problem quite quickly: we were running server instances in multiple availability zones (AZs) inside one region. From our billing information, we could see that we were transferring around 2200 GB per day: 1900 GB between AZs and 300 GB to the internet. Transfer to the internet costs about 10x more per GB than transfer between AZs, but at our volume the inter-AZ traffic still added up. With everything in a single AZ, the cost of transfer between AZs should be zero.

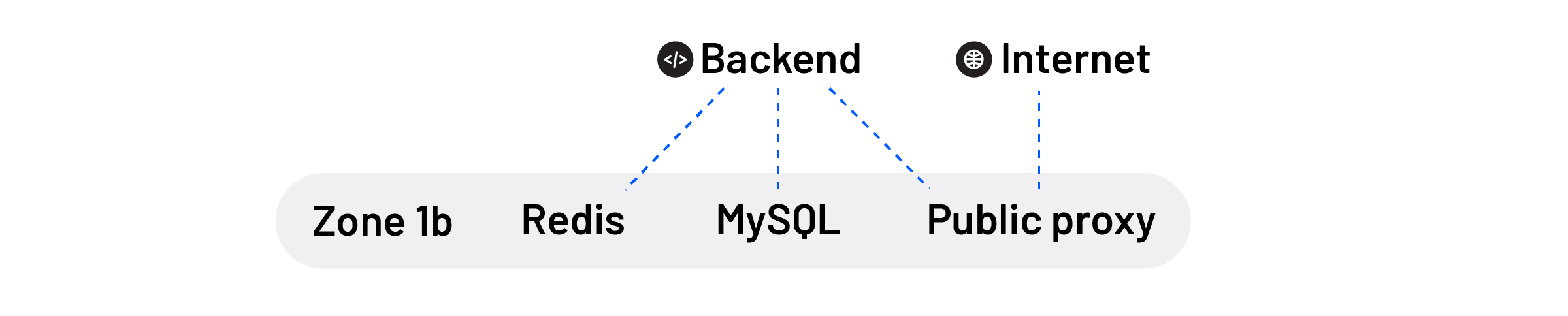

We started exploring our internal Cloud network in the AWS Console. As an entry point to our Cloud, we use Nginx public proxies. Only our public proxies are accessible from the internet, and their purpose is simple: they serve static data and proxy API requests to a backend instance. The public proxies were running in AZ 1b.

The backend does the heavy lifting and processes API requests. Unfortunately, the backend instances were hosted in AZ 1a. This means that all API requests were transferred between AZs.

To handle requests, the backend additionally connects to MySQL and Redis. To make things worse, MySQL and Redis were in AZ 1b, so every query crossed from 1a back to 1b, and the results then returned the same way the requests came in.
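To make the layout concrete, here is a minimal Python sketch that counts how many hops of a single API request cross an AZ boundary. The component names and the request path are simplifications of the description above, not our actual inventory.

```python
# Placement as described in the text: proxies, MySQL and Redis in 1b,
# backend in 1a. Component names are illustrative.
PLACEMENT_BEFORE = {
    "proxy": "1b",
    "backend": "1a",
    "mysql": "1b",
    "redis": "1b",
}

# Hops a single API request makes (request direction only; responses
# travel over the same links in reverse).
REQUEST_PATH = [
    ("proxy", "backend"),
    ("backend", "mysql"),
    ("backend", "redis"),
]


def cross_az_hops(placement, path):
    """Return the hops whose two endpoints sit in different AZs."""
    return [(src, dst) for src, dst in path if placement[src] != placement[dst]]


def colocate(placement, az):
    """Return a copy of the placement with every component in one AZ."""
    return {component: az for component in placement}


if __name__ == "__main__":
    print(cross_az_hops(PLACEMENT_BEFORE, REQUEST_PATH))
    print(cross_az_hops(colocate(PLACEMENT_BEFORE, "1b"), REQUEST_PATH))
```

With the original placement every hop on the path crosses an AZ boundary. Once all components share one AZ, none do.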

Once we figured out the root cause of our problem, we came up with a plan to move everything to the same AZ. Moving so many servers between AZs wasn’t easy, and we caused only a few outages along the way.

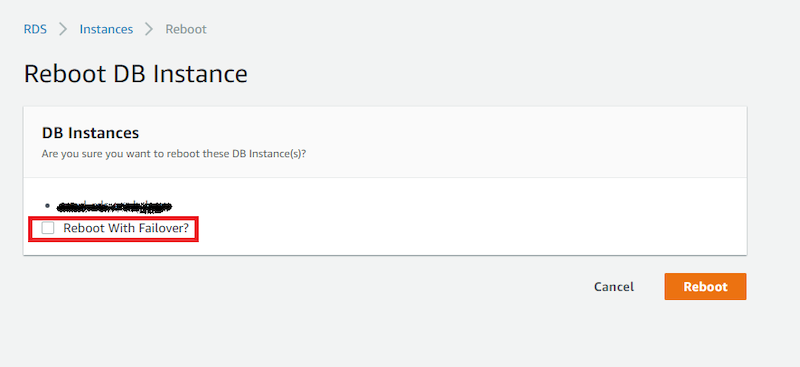

We were able to move RDS from one AZ to another without downtime with this neat trick: First, we enabled multi-AZ deployment and then used reboot with failover to switch primary DBs. AWS guarantees that DBs in multi-AZ are consistent and can be used interchangeably.

We took advantage of an interesting side effect of reboot with failover, which is primarily used for DB updates. It first promotes the standby DB to primary and only then reboots the old primary, so for us the switch went through with no noticeable downtime.
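The two steps can be sketched in Python roughly as follows. This is a sketch under assumptions, not the exact procedure we ran: `rds` is expected to behave like `boto3.client("rds")`, the instance identifier in the usage note is made up, and the waiter may need a status check before it if the modification has not started yet. Note that AWS picks the AZ of the standby, so the sketch only reports where the primary ended up.

```python
def move_primary_via_failover(rds, db_id):
    """Enable Multi-AZ, then reboot with failover so the standby becomes primary.

    `rds` is assumed to behave like boto3.client("rds"); `db_id` is the
    DB instance identifier. Returns the primary's AZ before and after.
    """

    def primary_az():
        info = rds.describe_db_instances(DBInstanceIdentifier=db_id)
        return info["DBInstances"][0]["AvailabilityZone"]

    waiter = rds.get_waiter("db_instance_available")
    before = primary_az()

    # Step 1: add a synchronized standby in another AZ.
    rds.modify_db_instance(
        DBInstanceIdentifier=db_id, MultiAZ=True, ApplyImmediately=True
    )
    waiter.wait(DBInstanceIdentifier=db_id)

    # Step 2: reboot with failover, which promotes the standby to primary.
    rds.reboot_db_instance(DBInstanceIdentifier=db_id, ForceFailover=True)
    waiter.wait(DBInstanceIdentifier=db_id)

    return before, primary_az()
```

A call would look like `move_primary_via_failover(boto3.client("rds"), "cloud-db")`, where `cloud-db` is a hypothetical identifier.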

Now the real question: how did this happen? Terraform, our infrastructure-as-code software, is a good servant but a bad master. Its defaults can be dangerous. We didn’t specify AZs in Terraform’s configuration, and as a result the AZs were chosen for us, effectively at random. The easy fix was to define AZs for all resources.
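One way to catch this kind of omission early is to scan a Terraform plan exported as JSON (`terraform plan -out=plan.out`, then `terraform show -json plan.out`) for resources that leave the AZ unset. The Python sketch below assumes the affected resource types expose an `availability_zone` argument. The type list and the sample plan are illustrative, not taken from our configuration.

```python
import json

# Resource types assumed to accept an `availability_zone` argument;
# adjust the set for the resources you actually manage.
AZ_AWARE_TYPES = {"aws_instance", "aws_db_instance", "aws_elasticache_cluster"}


def resources_without_pinned_az(plan):
    """List addresses of AZ-aware resources whose planned AZ is not set."""
    unpinned = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") not in AZ_AWARE_TYPES:
            continue
        after = (rc.get("change") or {}).get("after") or {}
        # A value that is only known after apply is absent from "after".
        if not after.get("availability_zone"):
            unpinned.append(rc["address"])
    return unpinned


# Tiny hand-written sample in the shape of `terraform show -json` output.
SAMPLE_PLAN = json.loads("""
{
  "resource_changes": [
    {"address": "aws_instance.backend", "type": "aws_instance",
     "change": {"after": {"instance_type": "t3.small"}}},
    {"address": "aws_instance.proxy", "type": "aws_instance",
     "change": {"after": {"availability_zone": "eu-west-1b"}}},
    {"address": "aws_s3_bucket.assets", "type": "aws_s3_bucket",
     "change": {"after": {}}}
  ]
}
""")

if __name__ == "__main__":
    print(resources_without_pinned_az(SAMPLE_PLAN))
```

Running a check like this in CI would flag any new resource that relies on the default placement.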

We’ve successfully reduced the cost of inter-AZ transfers to zero, which cut the cost of our Cloud network by 63%. This saving, not to mention the freed-up resources and time, will be fed back into our production line, creating an even more sustainable Cloud environment for our growing user base.

This piece was written by Peter, with additional writing from Raghav Karol and Jakob Bauers from the Airtame infrastructure team