The core of Airtame’s cross-platform streaming software is responsible for capturing a computer’s audio and video output, then encoding and transferring them wirelessly to the Airtame dongle, where the stream is decoded and rendered.

The codebase consists of ~20K lines of C, plus a bit of Objective-C and C++, and it is currently being developed by a team of four engineers. Over the past few months, we have focused on stabilizing our software and improving our engineering processes.

This post discusses some of the tools and processes we use to maintain a high standard of code quality and stay out of the proverbial software tar pit.

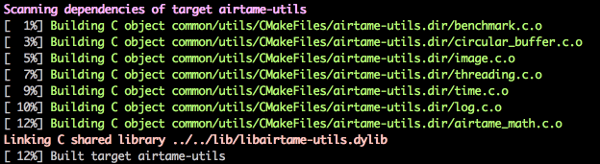

Our first line of defense against Bad Code™ is compiling with the `-Wall` and `-Werror` flags. `-Wall` enables a broad set of compiler warnings, and `-Werror` turns every warning into an error, so compilation fails as soon as any warning appears. This helps our engineers catch and fix simple mistakes very early in the development process. There’s also something very satisfying about watching dozens of source files being compiled without a single complaint from the compiler.

Airtame’s core compiles warning-free

We use Gerrit for code reviews and Jenkins for continuous integration. When a developer pushes a change to Gerrit, several Jenkins jobs are automatically triggered to analyze our code and provide feedback on the changes.

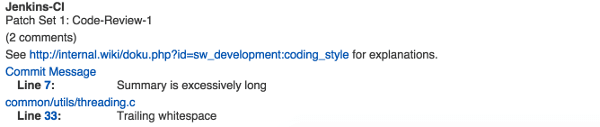

The first of these jobs executes Qt’s Simple Sanitizer, which ensures that Git commit messages are well-formed and that committed code does not contain any trailing whitespace characters or conflict markers, among other things.

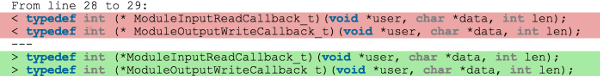
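To make these checks concrete, here is a minimal Python sketch of the kind of rules such a sanitizer applies. It is not the sanitizer's actual code: the function names, the 72-character subject limit, and the blank-second-line rule are assumptions chosen for illustration.

```python
import re

# Git conflict markers are exactly seven characters, optionally followed by a label.
CONFLICT_MARKER = re.compile(r"^(<{7}|={7}|>{7})( |$)")


def check_commit_message(message, max_subject_len=72):
    """Return a list of problems found in a commit message (hypothetical rules)."""
    lines = message.splitlines()
    if not lines or not lines[0].strip():
        return ["empty subject line"]
    problems = []
    if len(lines[0]) > max_subject_len:
        problems.append("subject line longer than %d characters" % max_subject_len)
    if len(lines) > 1 and lines[1].strip():
        problems.append("second line should be blank")
    return problems


def check_source_text(text):
    """Return (line_number, problem) pairs for whitespace and conflict-marker issues."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if line != line.rstrip():
            problems.append((number, "trailing whitespace"))
        if CONFLICT_MARKER.match(line):
            problems.append((number, "conflict marker"))
    return problems
```

A CI job could run both functions over each pushed commit and fail the build if either returns a non-empty list.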

While we don’t believe in one definitive “right way” to format C code, we do feel that it is beneficial to use a consistent coding standard (ours is heavily inspired by the Linux kernel coding style). To enforce our coding standard, we run LLVM’s clang-format, passing its output to a custom Python script which produces an HTML report.

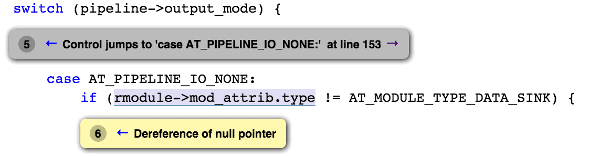
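Our actual script is not shown here, but the idea can be sketched in a few lines of Python. The version below is an assumption-laden illustration: it asks clang-format for the formatted version of a file (using the project’s `.clang-format` via `-style=file`) and uses the standard library’s `difflib.HtmlDiff` to render the differences. The function names and report layout are hypothetical.

```python
import difflib
import subprocess


def run_clang_format(path):
    """Return the file's contents as clang-format would rewrite them."""
    result = subprocess.run(
        ["clang-format", "-style=file", path],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def format_report(path, original, formatted):
    """Return an HTML diff page, or None if the file is already formatted."""
    if original == formatted:
        return None
    return difflib.HtmlDiff().make_file(
        original.splitlines(),
        formatted.splitlines(),
        fromdesc=path + " (as committed)",
        todesc=path + " (clang-format)",
        context=True,
        numlines=2,
    )
```

Keeping the diff rendering separate from the clang-format call means the report logic can be tested without clang-format installed.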

The next code quality check is performed by LLVM’s scan-build, a deep static analyzer with a very nice Jenkins plugin. The beauty of scan-build is that it explores many possible paths through the code without running it, enabling us to discover bugs before they materialize as runtime errors. We’ve found that scan-build is particularly good at detecting memory and resource management issues, especially those hidden in rarely triggered edge cases.

At Airtame, automated testing is deeply ingrained in our engineering culture. Every change pushed to Gerrit causes our automated test suite to be executed on Windows, Mac, and Linux. This includes cmocka unit tests of individual modules, as well as pytest-driven end-to-end system tests.

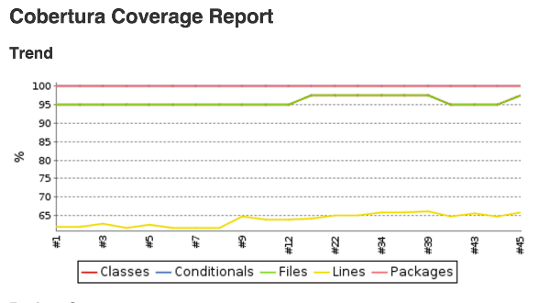

We use gcov to measure test coverage, passing its output to gcovr to produce a Jenkins-friendly XML file, which allows us to visualize test coverage over time. Our automated tests are absolutely essential to maintaining software quality during rapid development – they have saved us countless hours of manual testing and are especially useful for exposing platform-specific issues.
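As an illustration of what can be done with that XML file outside Jenkins, the following Python sketch reads a report and lists the files whose line coverage falls below a threshold. It assumes the Cobertura-style layout (a root `coverage` element and per-file `class` elements, each carrying a `line-rate` attribute); the function name and the 0.8 threshold are hypothetical.

```python
import xml.etree.ElementTree as ET


def coverage_summary(xml_text, threshold=0.8):
    """Return (overall_line_rate, [(filename, line_rate), ...] below threshold)."""
    root = ET.fromstring(xml_text)
    overall = float(root.get("line-rate", 0.0))
    below = sorted(
        (cls.get("filename"), float(cls.get("line-rate", 0.0)))
        for cls in root.iter("class")
        if float(cls.get("line-rate", 0.0)) < threshold
    )
    return overall, below
```

A check like this could fail a build when coverage of a changed file drops, although we rely on the Jenkins visualization rather than a hard gate.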

We additionally run end-to-end tests through Valgrind and its Jenkins plugin in order to detect memory and threading errors. This is the last of our automated quality checks, and if it succeeds, a +1 Verified vote is applied to the Gerrit change, indicating that it is ready for peer review.

Code reviews are another integral component of Airtame’s engineering culture. While we won’t dive too deeply into this topic (it could easily warrant its own blog post), suffice it to say that we take reviews very seriously and do our best to detect “code smells” as early as possible. After a change has been peer reviewed, it can be merged via Gerrit. If it passes our manual quality assurance checks, the change is pushed to our alpha testing group, then promoted to beta and, finally, to general availability.

This is just the beginning of Airtame’s code quality journey. As our codebase grows in size and number of contributors, we’ll need to continue adapting our tools and processes.